Episode 3: Deepfake - Stealing Faces

- ynkhlee

- Nov 13, 2025

- 5 min read

Updated: Nov 19, 2025

Prologue: March 16, 2022, Kyiv

Ukrainian President Zelensky. Or rather, someone wearing his face. [Source: BBC]

Three weeks into the Russian invasion. A Ukrainian news site was hacked, and a video of President Zelensky appeared.

The Zelensky in the video said: "Lay down your weapons. Surrender."

But this was not Zelensky.

Awkward voice intonation

Lip movements subtly misaligned

Suspiciously low image quality

Meta and Twitter removed it within hours. The Ukrainian government immediately denied it.

Widely regarded as the first deepfake deployed as an information weapon in wartime.

What we learned from Episode 1: Photography proves "that-has-been." But now even faces are no longer evidence.

Part 1: What Is a Deepfake? - When Faces Become Data

Deepfake = Deep Learning + Fake

Generative AI: Creates entirely new images

Deepfake: Replaces only faces in existing videos

Not creation, but replacement. Not fabrication, but theft.

How It Works: GAN (Generative Adversarial Network)

One network → Generates fake faces

Another network → Distinguishes real/fake

They compete and learn. Becoming increasingly sophisticated.

The mechanism:

Generator creates fake face

Discriminator judges: Real or Fake?

Generator improves based on feedback

Process repeats thousands of times

Result: Fakes the naked eye struggles to tell from reality
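The loop above can be sketched in a few lines of Python. This is a toy under loud assumptions, not a face model: each "face" is a single number, real samples cluster around `real_mean`, the Generator only learns an offset `b`, and the Discriminator is a one-input logistic classifier. The class name `ToyGAN` and every parameter are made up for illustration. Real deepfake systems use deep networks on images, but the back-and-forth has the same shape.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class ToyGAN:
    """1-D stand-in for a face GAN (hypothetical, for illustration only)."""

    def __init__(self, real_mean: float = 4.0, lr: float = 0.05, seed: int = 0):
        self.rng = random.Random(seed)
        self.real_mean = real_mean  # where the "real faces" live
        self.lr = lr
        self.b = 0.0  # Generator parameter: fake = noise + b
        self.w = 0.0  # Discriminator parameters: D(x) = sigmoid(w * x + c)
        self.c = 0.0

    def generate(self) -> float:
        """Generator creates a fake sample."""
        return self.rng.gauss(0.0, 1.0) + self.b

    def discriminate(self, x: float) -> float:
        """Discriminator's estimated probability that x is real."""
        return sigmoid(self.w * x + self.c)

    def step(self, batch: int = 64) -> None:
        real = [self.rng.gauss(self.real_mean, 1.0) for _ in range(batch)]
        fake = [self.generate() for _ in range(batch)]

        # Discriminator update: push D(real) up and D(fake) down
        # (gradient ascent on log D(real) + log(1 - D(fake))).
        gw = gc = 0.0
        for x in real:
            err = 1.0 - self.discriminate(x)
            gw += err * x
            gc += err
        for x in fake:
            err = self.discriminate(x)
            gw -= err * x
            gc -= err
        self.w += self.lr * gw / batch
        self.c += self.lr * gc / batch

        # Generator update: use the Discriminator's feedback to shift b
        # so that D(fake) goes up (gradient ascent on log D(fake)).
        gb = sum((1.0 - self.discriminate(x)) * self.w for x in fake)
        self.b += self.lr * gb / batch


gan = ToyGAN()
for _ in range(200):  # "process repeats"
    gan.step()
print(f"generator offset after training: {gan.b:.2f}")
```

In a setup this simple the two players tend to chase each other rather than settle, which is a small-scale preview of the arms race discussed later in this episode.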

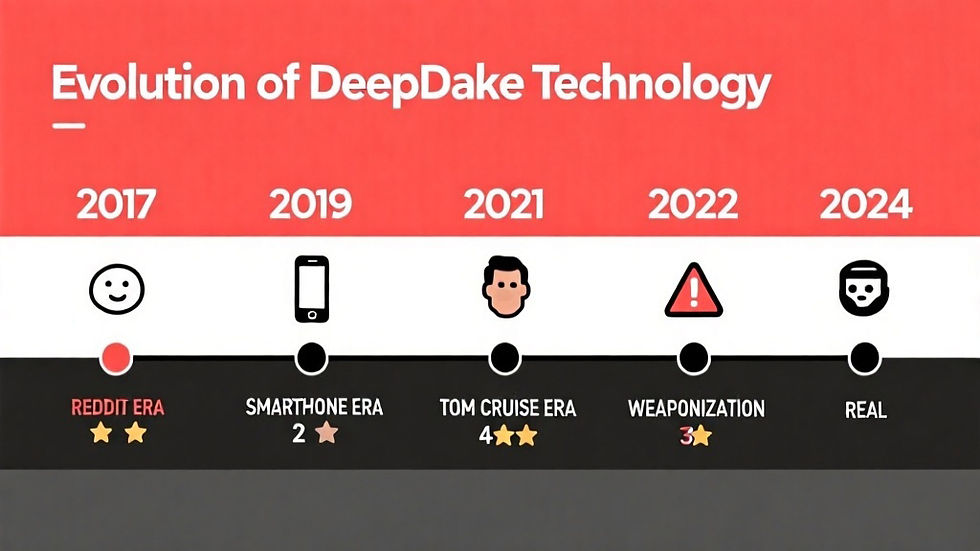

2017 Reddit debut → 2024 Everyday occurrence

Visual diagram showing the Generator-Discriminator competition loop, with INPUT (thousands of photos) → PROCESS (neural network training) → OUTPUT (synthetic face)

History of Evolution: From Crude to Undetectable

2017 Reddit Era:

Crude quality

Misaligned eyebrows

Obvious artifacts

Easily detected

2019 Smartphone Era:

FaceApp, Reface popularized

Consumer-friendly apps

Filter-like usage

Mass adoption begins

2021 Tom Cruise Moment:

TikTok deepfakes gain 11 million views

Even experts fooled

Professional-grade quality

Viral phenomenon

2022 Zelensky Case:

Weaponization in information warfare

Political impact

Global concern

Crisis point reached

2024 Present:

Real-time deepfakes possible

Instant generation

Often indistinguishable to the naked eye

The technology has matured

Timeline visualization showing quality progression from 2017 (★☆☆☆☆) to 2024 (★★★★★), with key milestones and characteristics at each stage

Question:

Is this merely manipulation? Or a philosophical question that asks us to reconsider the essence of photography?

Part 2: The Collapse of Indexicality - When Light No Longer Proves Reality

2024, Garosu-gil, Seoul: Same Space, Different Face

TAMBURINS store exterior wall. Large billboard.

2023: BLACKPINK Jennie

2024: Blonde Caucasian male model

This is not a deepfake. This is a real photograph of a real model.

But note the method of replacement:

Same building

Same location

Same composition

Only the face replaced

Faces Treated as Interchangeable Parts

The billboard shows:

Same space + Same lighting + Same composition = Only face replaced

The moment Jennie stood there vs the moment the blonde male stands there.

Are these different times? Or different versions of the same template?

Faces reduced to variables in an equation.

The Collapse of the Decisive Moment

Episode 1: Photography captures the 'decisive moment.' That moment is irreversible. Cannot be recreated.

But TAMBURINS shows the opposite.

If faces are interchangeable, if moments can be replicated, what remains of the 'decisive moment'?

Roland Barthes and Indexicality

Roland Barthes: "Photography proves 'that-has-been.'"

The traditional chain: Physical reality → Light → Lens → Film → Chemical trace → Photograph

This is indexicality. A direct, causal connection between reality and image.

Deepfake Reverses This Logic

The figure in the Zelensky video 'did not exist in front of the camera.'

His face is:

Data learned from thousands of photos

Composited onto a completely different person's body

What the image records is not light from 'reality' but the output of 'computation'

The new chain: Data sets → AI computation → Fake face

No causal connection. No physical evidence. Indexicality destroyed.

Visual concept map comparing:

TRADITIONAL: Physical Reality → Light Trace → Film Image ("That-has-been")

DEEPFAKE: Data Sets → AI Compute → Fake Face ("That-never-was")

The gap: Light captured ≠ Reality existed

Part 3: The Crisis of Trust - When We Can No Longer Believe Our Eyes

The MIT Study: Barely Better Than Coin Toss

2023 MIT Media Lab study: the average person detects deepfakes with 65% accuracy.

For context:

Coin toss: 50%

Average person: 65%

Margin: +15 percentage points

Slightly better than random guessing.

The "Few Seconds" That Changed Everything

Tom Cruise deepfake creator Chris Ume: "My goal is to make people pause for a few seconds wondering 'Is it real? Is it fake?'"

Those 'few seconds' are the core of the problem.

Before deepfakes:

See photo → Believe immediately

Instant trust

After deepfakes:

See photo → Pause → Question → Analyze → Maybe believe

Trust delayed, possibly denied

Statistical visualization: comparison box showing coin toss (50%) vs average person (65%), a margin of only 15 percentage points

We Can No Longer Immediately Trust Photographs

Episode 2 Photoshop: Changes can be tracked, compared against the original

Deepfake: The original itself doesn't exist

There's no reference point to compare against.

The photograph still exists. But the presumption of truth is gone.

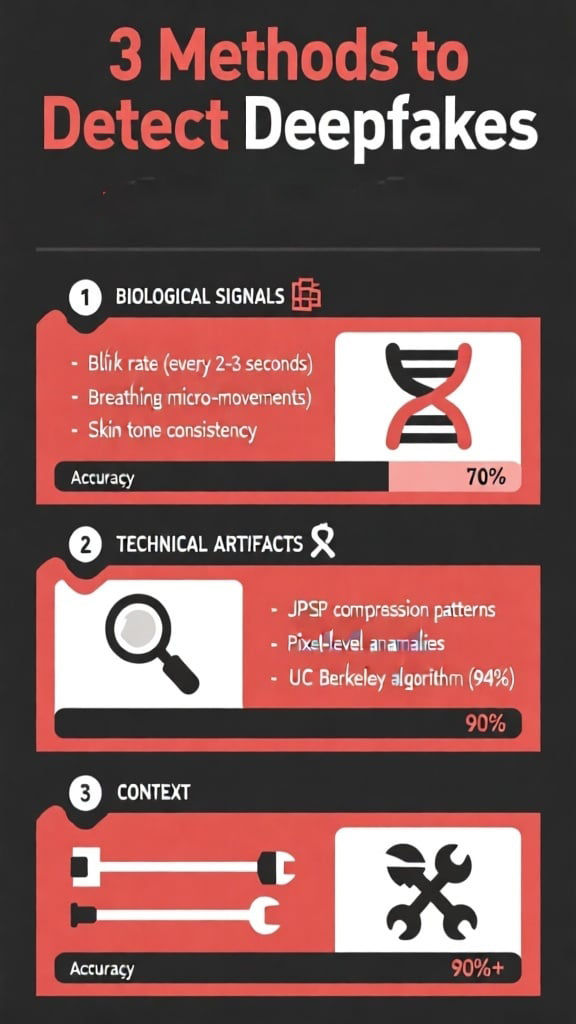

The Limits of Detection: Three Methods

Method 1: Biological Inconsistencies

Blinking patterns (every 2-3 seconds)

Subtle movements from breathing

Naturalness of skin tone changes

Accuracy: ~70%
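As a rough illustration of Method 1, the sketch below checks whether the blinking in a clip falls inside a plausible range. It assumes blink timestamps (in seconds) have already been extracted by some eye-tracking step that is not shown. The function names and the `min_gap` / `max_gap` thresholds are illustrative assumptions, not values from any published detector.

```python
from statistics import mean


def blink_intervals(blink_times):
    """Seconds between consecutive blinks."""
    return [later - earlier for earlier, later in zip(blink_times, blink_times[1:])]


def looks_suspicious(blink_times, clip_seconds, min_gap=1.0, max_gap=10.0):
    """Flag a clip whose blinking falls outside an assumed plausible range.

    min_gap and max_gap are placeholder thresholds chosen for this sketch.
    """
    gaps = blink_intervals(blink_times)
    if not gaps:
        # Zero or one blink: only suspicious if the clip is long enough
        # that more blinks would be expected.
        return clip_seconds > max_gap
    return not (min_gap <= mean(gaps) <= max_gap)


# A face blinking roughly every 2.5 seconds passes the check.
print(looks_suspicious([1.0, 3.5, 6.0, 8.4], clip_seconds=10.0))
# A 30-second clip with no blinks at all gets flagged.
print(looks_suspicious([], clip_seconds=30.0))
```

A heuristic like this is easy to evade once generators learn to blink, which is part of why the accuracy of this method stays modest.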

Method 2: Technical Artifacts

JPEG compression pattern analysis

Pixel-level anomalies

UC Berkeley algorithm: 94% accuracy

Accuracy: 90%+
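One pattern that compression analysis can look for is the regular 8x8 block grid that JPEG-style compression leaves behind. The sketch below is a simplified stand-in and not the UC Berkeley algorithm: it measures how much stronger the brightness jumps are on that grid than off it, using a grayscale image given as a plain list of rows. The function name `blockiness` is hypothetical, and comparing the score of a face region against the rest of the frame is an assumed usage, not a documented method.

```python
def blockiness(gray, block=8):
    """Ratio of the mean horizontal jump on block boundaries to the mean jump elsewhere.

    gray is a 2-D list of brightness values. A ratio near 1 means no visible
    grid; a large ratio means strong block edges. Only horizontal neighbours
    are checked, to keep the sketch short.
    """
    on_grid, off_grid = [], []
    for row in gray:
        for x in range(1, len(row)):
            jump = abs(row[x] - row[x - 1])
            (on_grid if x % block == 0 else off_grid).append(jump)
    if not on_grid or not off_grid:
        raise ValueError("image is too narrow to contain a block boundary")
    mean_on = sum(on_grid) / len(on_grid)
    mean_off = sum(off_grid) / len(off_grid)
    if mean_off == 0:
        return 1.0 if mean_on == 0 else float("inf")
    return mean_on / mean_off


# A smooth gradient has no grid; a synthetic blocky image has a strong one.
smooth = [[x for x in range(32)] for _ in range(4)]
blocky = [[(x // 8) * 40 + (x % 2) for x in range(32)] for _ in range(4)]
print(blockiness(smooth), blockiness(blocky))
```

If a pasted face was resized or re-rendered, its grid strength may differ from its surroundings, and that mismatch is the kind of pixel-level anomaly this method hunts for.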

Method 3: Metadata Verification

EXIF data analysis

Source cross-checking

Timeline consistency

Accuracy: Variable (easily forged)
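A timeline check of the kind Method 3 describes might look like the sketch below. It assumes the EXIF fields have already been read into a dictionary by some other tool. `DateTimeOriginal` and `DateTime` are standard EXIF tags, while the function name `timeline_problems` and the 24-hour tolerance are assumptions made for this example.

```python
from datetime import datetime

EXIF_FORMAT = "%Y:%m:%d %H:%M:%S"  # EXIF stores date-times as "YYYY:MM:DD HH:MM:SS"


def timeline_problems(exif: dict, claimed_event: datetime, tolerance_hours: float = 24.0) -> list:
    """Return a list of timeline inconsistencies (empty means none were found)."""
    taken_raw = exif.get("DateTimeOriginal")
    if taken_raw is None:
        return ["no capture time recorded"]

    problems = []
    taken = datetime.strptime(taken_raw, EXIF_FORMAT)

    modified_raw = exif.get("DateTime")
    if modified_raw is not None:
        modified = datetime.strptime(modified_raw, EXIF_FORMAT)
        if modified < taken:
            problems.append("file modified before it was captured")

    gap_hours = abs((taken - claimed_event).total_seconds()) / 3600
    if gap_hours > tolerance_hours:
        problems.append("capture time far from claimed event")
    return problems


exif = {"DateTimeOriginal": "2022:03:16 10:00:00", "DateTime": "2022:03:10 12:00:00"}
print(timeline_problems(exif, claimed_event=datetime(2022, 3, 16, 11, 0)))
```

An empty result proves very little, because these fields can be rewritten with ordinary tools. The check only catches careless forgeries.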

Visual checklist showing the three detection methods with their accuracy rates and key techniques, plus "THE FUNDAMENTAL PROBLEM" box showing the endless race: Detection improves → Generation also improves → ∞ RACE

The Fundamental Problem: An Endless Arms Race

Detection algorithms advance → Generation algorithms also evolve

MIT Professor Andrew Owens: "Deepfake detection is like antivirus software. What works today may not work tomorrow."

Technology vs technology. An endless race with no finish line.

Every breakthrough in detection becomes the training data for the next generation of fakes.

Part 4: What Should Photographers Do? - Two Paths Forward

The Same Question, Different Contexts

Zelensky deepfake: Crude, quickly exposed

TAMBURINS case: Technically perfect, legitimate replacement

One is manipulation, one is legitimate replacement.

But both ask the same question:

Does a face in a photograph still prove that person existed there?

Revisiting the 3-Layer Structure (Episode 1)

Layer 1: Physical reality

Layer 2: Recording of light

Layer 3: Social interpretation

Deepfake Destroys Layer 2

The recording of light is no longer connected to reality.

If Layer 2 is broken, can Layers 1 and 3 maintain photography's meaning?

Two possible responses:

Response 1: Record More Context (Transparency)

Disclose not just a single image but the entire production process.

What to provide:

RAW files

On-location video footage

Complete metadata

Shooting contracts

Behind-the-scenes documentation

Who's doing this:

BBC, Reuters introduced 'verifiable photography' protocols

Photojournalism organizations require process documentation

The new equation: Photograph + Evidence of process = Trust

Photographers must now deliver both the result and the evidence that makes it trustworthy.
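One way to picture "Photograph + Evidence of process" is a manifest that fingerprints the published photo together with its supporting files. The sketch below is hypothetical and is not the protocol used by the BBC, Reuters, or any other organization: the function names `fingerprint`, `build_manifest`, and `verify` are invented for illustration, and file contents are passed in as bytes to keep the example self-contained.

```python
import hashlib
import json


def fingerprint(data: bytes) -> str:
    """SHA-256 digest of a file's bytes, as hex."""
    return hashlib.sha256(data).hexdigest()


def build_manifest(photo: bytes, evidence: dict) -> str:
    """Bundle the photo's fingerprint with fingerprints of its process evidence.

    evidence maps a label (for example "raw_file") to that file's bytes.
    """
    manifest = {
        "photo_sha256": fingerprint(photo),
        "evidence_sha256": {name: fingerprint(blob) for name, blob in evidence.items()},
    }
    return json.dumps(manifest, indent=2, sort_keys=True)


def verify(manifest_json: str, photo: bytes, evidence: dict) -> bool:
    """True only if the photo and every evidence file still match the manifest."""
    return manifest_json == build_manifest(photo, evidence)


manifest = build_manifest(b"published jpeg bytes", {"raw_file": b"raw sensor bytes"})
print(verify(manifest, b"published jpeg bytes", {"raw_file": b"raw sensor bytes"}))
print(verify(manifest, b"edited jpeg bytes", {"raw_file": b"raw sensor bytes"}))
```

A manifest like this only shows that the files have not changed since it was written. Whether the scene itself was real still rests on the photographer and the surrounding evidence.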

Response 2: Embrace Imperfection (Authenticity)

Deepfake pursues: Perfection, consistency, calculation

Photographer captures: Contingency, mistakes, unpredictable moments

The 'decisive moment' from Episode 1 gains meaning again.

What AI can do:

Calculate probability

Optimize composition

Perfect symmetry

Eliminate flaws

What AI cannot do:

Capture pure chance

Predict the unpredictable

Embrace meaningful mistakes

Feel the unrepeatable moment

The photographer's advantage: Being there. In that unrepeatable moment. With all its imperfections.

Two-path diagram showing:

PATH 1: MORE CONTEXT (Transparency) - RAW files, footage, metadata, contracts, verification protocols

PATH 2: EMBRACE IMPERFECTION (Authenticity) - Contingency, mistakes, unpredictable moments, the decisive moment

RESULT: Photography as Evidence + Attitude

Epilogue: In Front of the Billboard

In front of the TAMBURINS billboard. What are the tourists who once photographed Jennie now photographing?

Is that photo still a 'commemoration'?

The hundreds of photos we upload to social media:

Evidence that we truly 'existed'?

Or a combination of how we 'wanted to exist'?

The Next Question

Deepfakes attack photography's indexicality. But this is only the beginning.

As of 2024: Image-generating AI creates scenes that never existed at all.

Deepfake: Deceives about 'whose face it is'

Generative AI: Makes the very question 'did this exist?' meaningless

The copyright battles surrounding those generated images. Now unfolding in courtrooms around the world.

Primary Sources

BBC News, "Deepfake presidents used in Russia-Ukraine war", 2022

MIT Media Lab, "Deepfake Detection Accuracy Study", 2023

WIRED, "A Zelensky Deepfake Was Quickly Defeated", 2022

UC Berkeley, "JPEG Artifact Analysis for Deepfake Detection", 2023

Field photography: TAMBURINS store, Garosu-gil, Seoul (2024)

[Episode 1: What Is Photography?]

[Episode 2: The Crisis of Authenticity]

→ Episode 3: Deepfake - Stealing Faces (Current)

[Episode 4: The Great Generation] (Next)

This series explores photography in the digital image era from philosophical, technical, and social perspectives.
